Kea Performance Tests - 1.4.0 vs. 1.5.0

Introduction

This report presents a series of measurements on Kea versions 1.4.0 and 1.5.0, taken using ISC's performance laboratory (perflab). The measurements were carried out in February and March 2019. The results presented here for Kea 1.5.0 supersede those presented in the preliminary report, as the issues affecting measurement in that report were corrected.

Configuration

- perfdhcp and the Kea server each ran on their own machine. Each machine was a Dell R430 server with 128 GB of RAM, a single Xeon E5-2680 v3 CPU @ 2.50GHz and an Intel X710 10 Gbps NIC for test traffic. The CPU is 12-core, but hyperthreading was disabled (this was the result of experiments to improve the repeatability of results). Persistent storage was provided by hard disk drives (not solid state disks) connected via a RAID controller. The systems were running Fedora 27.

- Kea was set up with a single subnet. All configurations (both Kea4 and Kea6) had a single pool of 2^24 (16777216) addresses. Since the maximum lease rate observed is about 8,000 leases/second and each test runs for 30 seconds, this is more than sufficient to ensure that the pool is never filled during a test (see the next section for the definition of a test).

- Shared subnets were not used.

- No host reservations were defined, either in the configuration file or in the database.

- For the tests with the database backends, both Kea and the database server were running on the same machine. (Performance may be very different if the database and Kea are not running on the same host. Also, the overall performance may be much higher if more than one Kea instance is connected to the same database. In these tests though, only a single instance of Kea was running.)

- Kea 1.5.0 introduced the congestion handling feature. For the measurements reported here, it was disabled.
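
The pool-size claim in the configuration notes above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted there (a pool of 2^24 addresses, a maximum of about 8,000 leases/second and 30-second tests); the function and variable names are illustrative.

```python
# Figures quoted in the configuration notes.
POOL_SIZE = 2 ** 24       # addresses in the single pool
MAX_LEASE_RATE = 8_000    # leases/second, approximate observed maximum
TEST_DURATION = 30        # seconds per test


def pool_usage_fraction(lease_rate: int, duration: int, pool_size: int) -> float:
    """Return the fraction of the pool consumed by one test at the given rate."""
    return (lease_rate * duration) / pool_size


leases_per_test = MAX_LEASE_RATE * TEST_DURATION
fraction = pool_usage_fraction(MAX_LEASE_RATE, TEST_DURATION, POOL_SIZE)

print(f"leases per test: {leases_per_test}")
print(f"pool used per test: {fraction:.2%}")
```

Even at the highest observed rate, a single test consumes well under 2% of the pool, and the leases are cleared between tests.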

Method

Tests were run against Kea 1.4.0 (git commit adecd0b6b6f2967f3e6d4c2258e59af2b0767168) and 1.5.0 (git commit a0d3d9729b506eaa4674e5bd8b25b87d84d2492d).

- The server was started before each measurement run began and stopped after it ended. Within each run, ten tests were carried out, each test consisting of running perfdhcp for 30 seconds and recording the results. Kea was not restarted between tests in a run. Three measurement runs were done for each request rate, the mean of all the results being used as the lease rate. (Note: owing to a bug in the rate-setting code, only two measurement runs were done for some of the very low request rates, well below the value of real interest, which is the rate at which the Kea server was saturated. This was fixed and three measurement runs were carried out for higher rates. This should not affect the results other than reducing the confidence level of the error bars at those rates.)

- The MySQL, PostgreSQL and Cassandra databases were started before a run and stopped afterwards. After the database was started, any previous schema was deleted and the database schema was set up from scratch. Before each test in a run, the lease tables were cleared. With the memfile backend, the lease file (if present) was deleted before each run, so the first test started off with an empty lease database. Between each test, the in-memory database was cleared using a "lease4-wipe" or "lease6-wipe" command.

- perfdhcp was set up to simulate one million clients.
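
For readers unfamiliar with the wipe commands mentioned above, the following sketch builds the JSON text of such a command. It is an illustration only: the argument shape (a single `subnet-id`) is an assumption based on the lease commands hook library, the subnet ID of 1 is hypothetical (the report does not state the ID of its single subnet), and the report does not describe how the command was delivered to the server.

```python
import json


def build_wipe_command(family: int, subnet_id: int) -> str:
    """Build the JSON text of a lease4-wipe or lease6-wipe command.

    The "subnet-id" argument is an assumed argument shape, not something
    stated in the report.
    """
    if family not in (4, 6):
        raise ValueError("family must be 4 or 6")
    command = {
        "command": f"lease{family}-wipe",
        "arguments": {"subnet-id": subnet_id},
    }
    return json.dumps(command)


# Hypothetical subnet ID of 1.
print(build_wipe_command(4, 1))
print(build_wipe_command(6, 1))
```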

Specific points to note:

- The rate at which packets were being sent to the server was calculated from the perfdhcp output. (The version of perfdhcp used in the tests has a bug by which it sends requests at a rate below that specified on the command line. It does however log the number of packets sent during the test, and this is the information used to calculate the packet rate.)

- When processing the data, the first test in each run was ignored. It has been found in the past that, in some cases, the first test within a run can have a lease rate significantly different from that reported for other tests. It is conjectured that this is due to the Kea server and/or database server doing the initial allocation of appropriate data structures, but the reason is not known for certain and needs to be investigated.
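
The two points above amount to a small piece of post-processing, sketched below. This is not the perflab code: the function names and the sample counts are hypothetical, and the only facts taken from the report are the 30-second test duration, the use of the logged packet count to derive the request rate, and the discarding of the first test in each run.

```python
from statistics import mean
from typing import Sequence

TEST_DURATION = 30.0  # seconds per perfdhcp test, as stated in the method


def request_rate(packets_sent: int, duration: float = TEST_DURATION) -> float:
    """Actual request rate, derived from the packet count that perfdhcp logs."""
    return packets_sent / duration


def lease_rate(runs: Sequence[Sequence[int]], duration: float = TEST_DURATION) -> float:
    """Mean lease rate over all runs, ignoring the first test of each run."""
    kept = [leases / duration for run in runs for leases in run[1:]]
    if not kept:
        raise ValueError("no tests left after discarding the first of each run")
    return mean(kept)


# Hypothetical numbers for illustration only (not taken from the report).
packets_sent = 14_700
runs = [
    [13_200, 15_000, 15_060],  # leases granted per test in run 1
    [13_500, 14_940, 15_000],  # leases granted per test in run 2
]
print(request_rate(packets_sent))  # 490.0
print(lease_rate(runs))            # 500.0
```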

Results

For each combination of Kea protocol (DHCPv4 and DHCPv6) and backend, the following graphs are presented:

- Lease rate (the rate at which the server hands out leases, measured in leases/second) against the request rate (DISCOVER packets/second for DHCPv4, SOLICIT packets/second for DHCPv6). This is effectively the performance of the server. As noted above, the request rate is calculated from the number of packets sent by perfdhcp during the test.

- Failed requests as a percentage of requests sent as a function of the request rate. In other words, the percentage of DISCOVER or SOLICIT packets sent to the server that did not result in a lease being allocated.

- Initial exchange drop fraction as a function of the request rate. This is the percentage of packets in the first handshake that got no response, i.e. for DHCPv4, the percentage of DISCOVER packets that did not result in an OFFER being received. (For DHCPv6, this is the percentage of SOLICIT packets that did not result in a received ADVERTISE.)

- Confirmation exchange drop fraction as a function of the request rate. This is the percentage of packets in the second handshake that got no response, i.e. for DHCPv4, the percentage of REQUEST packets that did not result in an ACK being received. (For DHCPv6, this is the percentage of REQUEST packets that did not result in a received REPLY.)

- Initial exchange round-trip time as a function of the request rate. This is the interval in milliseconds between sending a DISCOVER or SOLICIT and receiving the corresponding OFFER or ADVERTISE.

- Confirmation exchange round-trip time as a function of the request rate. This is the interval in milliseconds between sending a REQUEST and receiving the corresponding ACK or REPLY.

(In the illustrations that follow, these graphs are in order of top to bottom, left to right.)
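
The three percentage quantities defined in the list above can be written down directly from per-test packet counts. The sketch below is an illustration under stated assumptions: the `ExchangeCounts` type and its field names are hypothetical, the sample counts are invented, and a lease is assumed to have been allocated whenever the final ACK (or REPLY) is received.

```python
from dataclasses import dataclass


@dataclass
class ExchangeCounts:
    """Hypothetical per-test packet counts; the field names are illustrative."""
    initial_sent: int        # DISCOVER (DHCPv4) or SOLICIT (DHCPv6) packets sent
    initial_received: int    # OFFER or ADVERTISE packets received
    confirm_sent: int        # REQUEST packets sent
    confirm_received: int    # ACK or REPLY packets received


def percent(part: int, whole: int) -> float:
    """Return part as a percentage of whole (0.0 if whole is zero)."""
    return 100.0 * part / whole if whole else 0.0


def failed_request_pct(c: ExchangeCounts) -> float:
    """Initial requests that did not end in a lease (assumed: no final ACK/REPLY)."""
    return percent(c.initial_sent - c.confirm_received, c.initial_sent)


def initial_drop_pct(c: ExchangeCounts) -> float:
    """First-handshake packets that got no response."""
    return percent(c.initial_sent - c.initial_received, c.initial_sent)


def confirmation_drop_pct(c: ExchangeCounts) -> float:
    """Second-handshake packets that got no response."""
    return percent(c.confirm_sent - c.confirm_received, c.confirm_sent)


# Invented counts for one 30-second test.
counts = ExchangeCounts(15_000, 14_700, 14_700, 14_625)
print(failed_request_pct(counts))     # 2.5
print(initial_drop_pct(counts))       # 2.0
print(confirmation_drop_pct(counts))
```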

To make the graphs smoother, the request rate in the graphs is binned in intervals of 10 packets/second for the database configurations, and in intervals of 100 packets/second for the memfile configurations. In each bin the mean of all the tests in that bin is plotted; the band around the line represents the 95% confidence interval.
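
A minimal sketch of this kind of binning is shown below. The report does not say how the confidence interval was computed, so the normal approximation used here (1.96 standard errors either side of the mean) is an assumption, as are the function name and the sample points.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def bin_results(points, bin_width):
    """Group (request_rate, lease_rate) points into bins and summarise each bin.

    Returns a list of (bin_start, mean_lease_rate, half_width) tuples sorted by
    bin, where half_width is the 95% confidence half-interval under a normal
    approximation (an assumption; the report does not state its method).
    """
    bins = defaultdict(list)
    for request_rate, lease_rate in points:
        bin_start = int(request_rate // bin_width) * bin_width
        bins[bin_start].append(lease_rate)

    summary = []
    for bin_start in sorted(bins):
        values = bins[bin_start]
        if len(values) > 1:
            half_width = 1.96 * stdev(values) / math.sqrt(len(values))
        else:
            half_width = 0.0  # a single point gives no spread estimate
        summary.append((bin_start, mean(values), half_width))
    return summary


# Invented points: (request rate, lease rate), binned at 10 packets/second.
points = [(491.2, 493.0), (497.8, 495.0), (503.1, 501.0)]
for bin_start, centre, half_width in bin_results(points, 10):
    print(bin_start, centre, round(half_width, 2))
```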

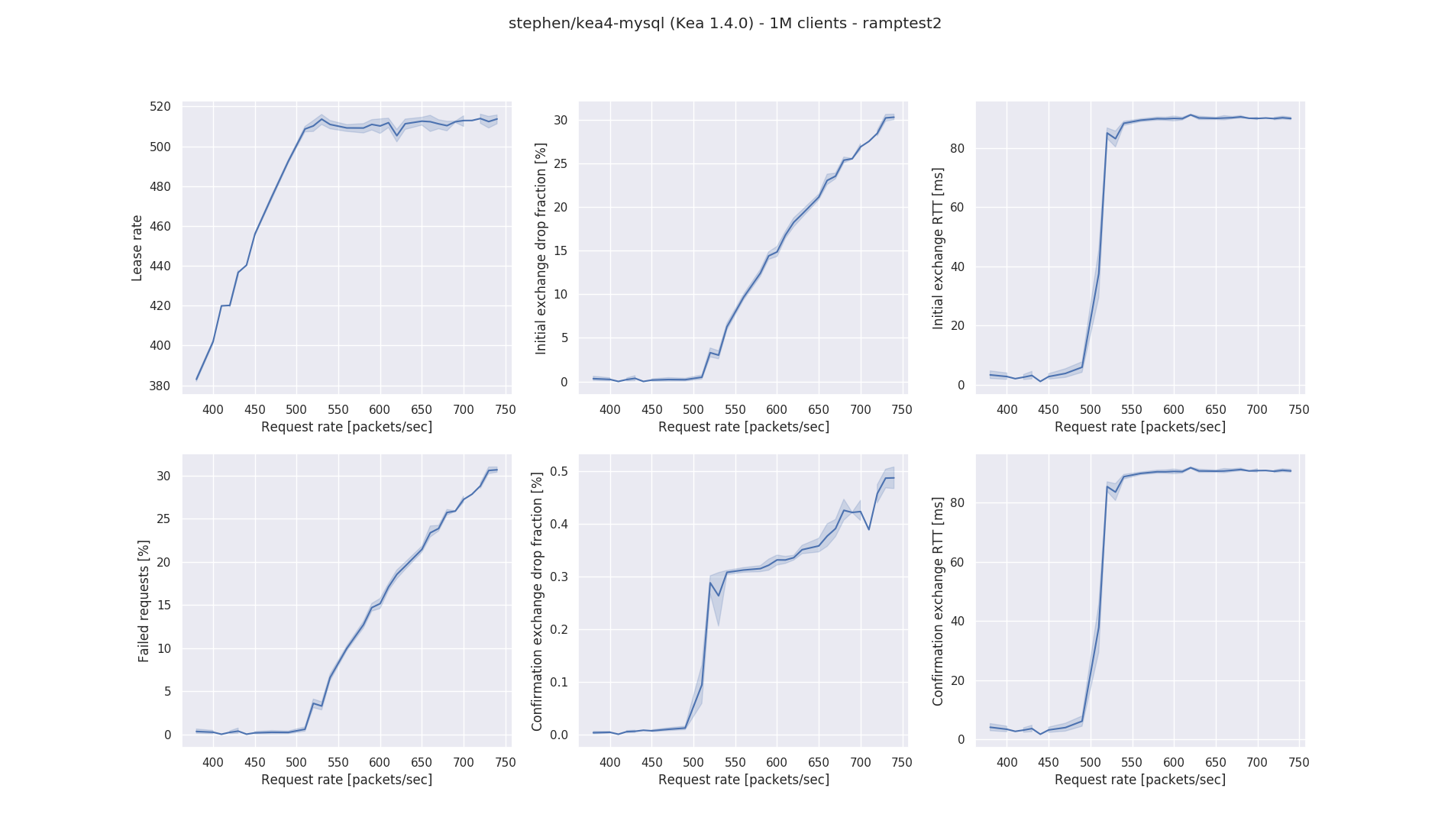

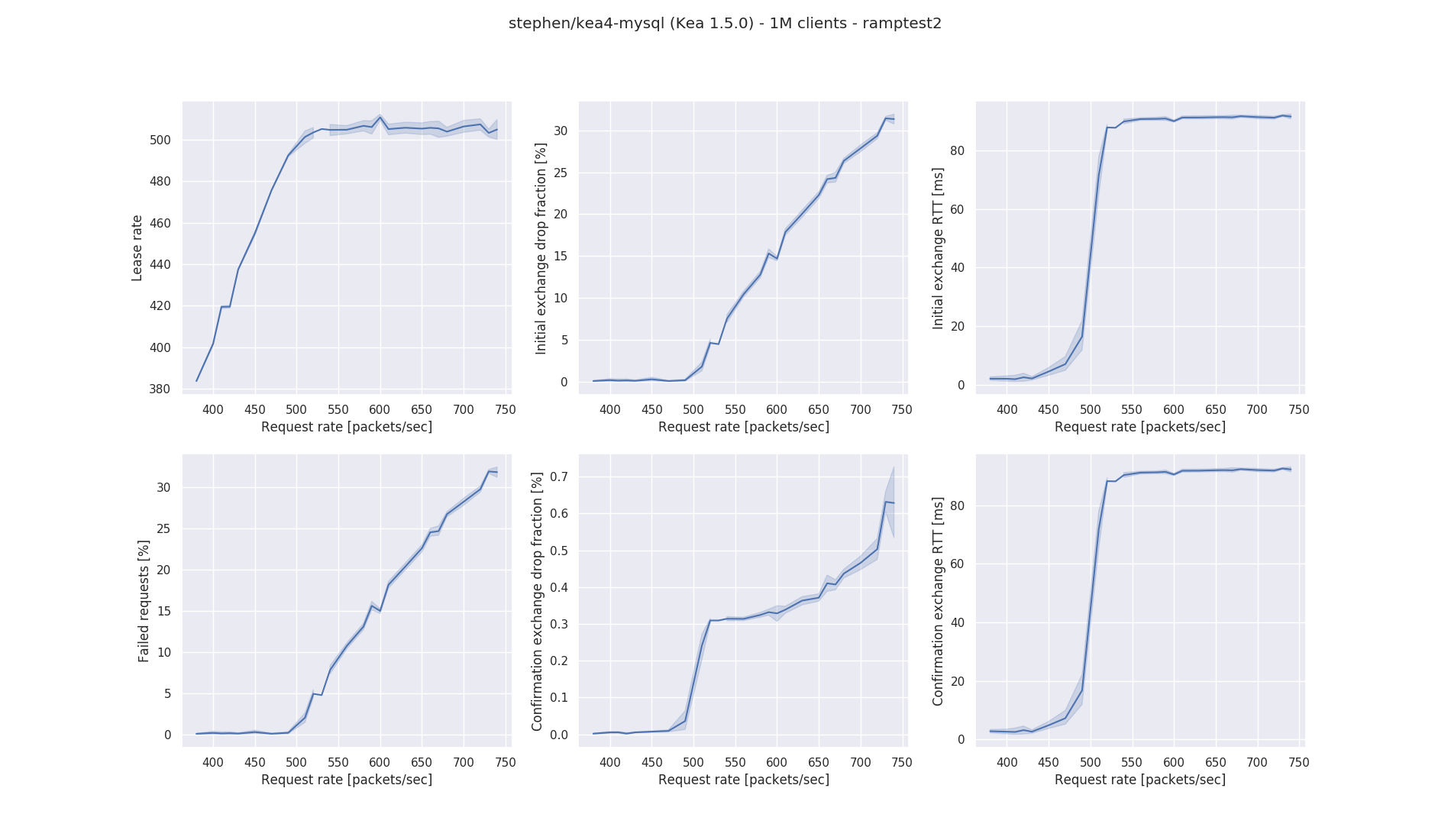

MySQL - DHCPv4

This section presents results for Kea/DHCPv4 running with a MySQL backend, version 5.7.21. As noted above, the database was initialized from scratch at the start of each run, and cleared between tests.

Here, the lease rate increases more or less linearly with increasing request rate up to about 500 leases/second. (The binning of different measurements into buckets of 10 requests/second is responsible for the apparent contradiction at some points on the graph of the lease rate being slightly higher than the request rate.) Since there is virtually no packet loss in this region, the linear nature of the curve is to be expected - Kea is servicing packets as fast as they are being received.

In both graphs, as the request rate approaches 500 requests/second, the number of failed requests starts increasing and the lease rate flattens off, leading to a maximum performance of about 510 leases/second. The bulk of the packets dropped are the initial DISCOVER packets. Interestingly, the fraction of REQUEST packets that do not receive a reply is much lower than the fraction of DISCOVER packets not receiving a response. This may be because, with a DISCOVER, the candidate address has to be selected and so Kea has to go through the full allocation procedure. With a REQUEST, Kea just has to check that the address is still free (which, given the size of the pool, it will be) and assign it. In other words, REQUEST processing is usually much faster than DISCOVER processing (although this explanation does require that DISCOVER and REQUEST packets arrive in separate bursts). The "knee" on the "Confirmation Exchange Drop fraction" graph, which plots the fraction of REQUEST packets that received no ACK, is odd, although it appears to occur at the point at which the system starts experiencing significant overall packet loss. The absolute fraction of packets lost in the confirmation exchange is very small though: when leases are not allocated, the reason is mostly that the initial DISCOVER/OFFER handshake did not complete.

The final pair of graphs in each set show that the round-trip time of packets sharply increases as Kea reaches saturation. Again, this is expected. While Kea is processing requests faster than they arrive, a received packet will get processed almost immediately. When Kea starts falling behind, packets will be queued in the system's receive buffer and so will have to wait before being processed. At saturation, the queue will be full all the time, so every packet will have to wait for Kea to process a queue-length's worth of packets before it is processed, which explains the relatively constant RTT at high packet rates. The transition occurs where Kea is processing packets as fast as they arrive.
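
The queueing argument above can be illustrated with a deliberately simple model: a server with a fixed service rate and a bounded receive queue. This is an idealisation rather than a description of Kea's internals; it ignores burstiness, and the service rate and queue capacity used below are hypothetical (the report does not state the receive buffer size).

```python
def steady_state_wait_ms(arrival_rate: float, service_rate: float,
                         queue_capacity: int) -> float:
    """Approximate queueing delay (ms) for a fixed-rate server with a bounded queue.

    Below saturation the queue stays empty, so the delay is taken as zero.
    Above saturation the queue stays full, so every accepted packet waits
    for a full queue's worth of packets to be processed first.
    """
    if arrival_rate <= service_rate:
        return 0.0
    return 1000.0 * queue_capacity / service_rate


# Hypothetical server: 500 packets/second, room for 200 queued packets.
for rate in (400, 500, 600, 2000):
    print(rate, steady_state_wait_ms(rate, 500, 200))
```

In this model the delay jumps from zero to a constant value once the arrival rate exceeds the service rate, and does not grow further with load, which matches the relatively flat round-trip time described for high packet rates.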

The "knee" in the lease rate curve appears to be the point at which the packet loss rate is around 2.5%: for lack of a better definition, the lease rate at which the packet loss rate is this value will be taken as the performance of Kea in this configuration. With this definition, the performance of Kea 1.4.0 is about 510 leases/second and that of Kea 1.5.0 is about 504 leases/second.
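
The figures in this report were read from the graphs by eye. Purely as an illustration of the definition, the sketch below shows how the lease rate at a 2.5% loss rate could be located by linear interpolation between measured points. The function name and the sample curve are hypothetical and are not data from the report.

```python
LOSS_THRESHOLD = 2.5  # percent of failed requests, as in the definition above


def lease_rate_at_threshold(curve, threshold=LOSS_THRESHOLD):
    """Return the lease rate at which the failed-request percentage first
    crosses the threshold, interpolating linearly between neighbouring points.

    curve is a list of (failed_pct, lease_rate) pairs ordered by increasing
    request rate. Returns None if the threshold is never crossed from below.
    """
    for (loss0, rate0), (loss1, rate1) in zip(curve, curve[1:]):
        if loss0 < threshold <= loss1:
            t = (threshold - loss0) / (loss1 - loss0)
            return rate0 + t * (rate1 - rate0)
    return None


# Invented curve: (failed requests in percent, lease rate in leases/second).
curve = [(0.1, 480.0), (0.5, 495.0), (4.5, 515.0), (12.0, 512.0)]
print(lease_rate_at_threshold(curve))  # 505.0
```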

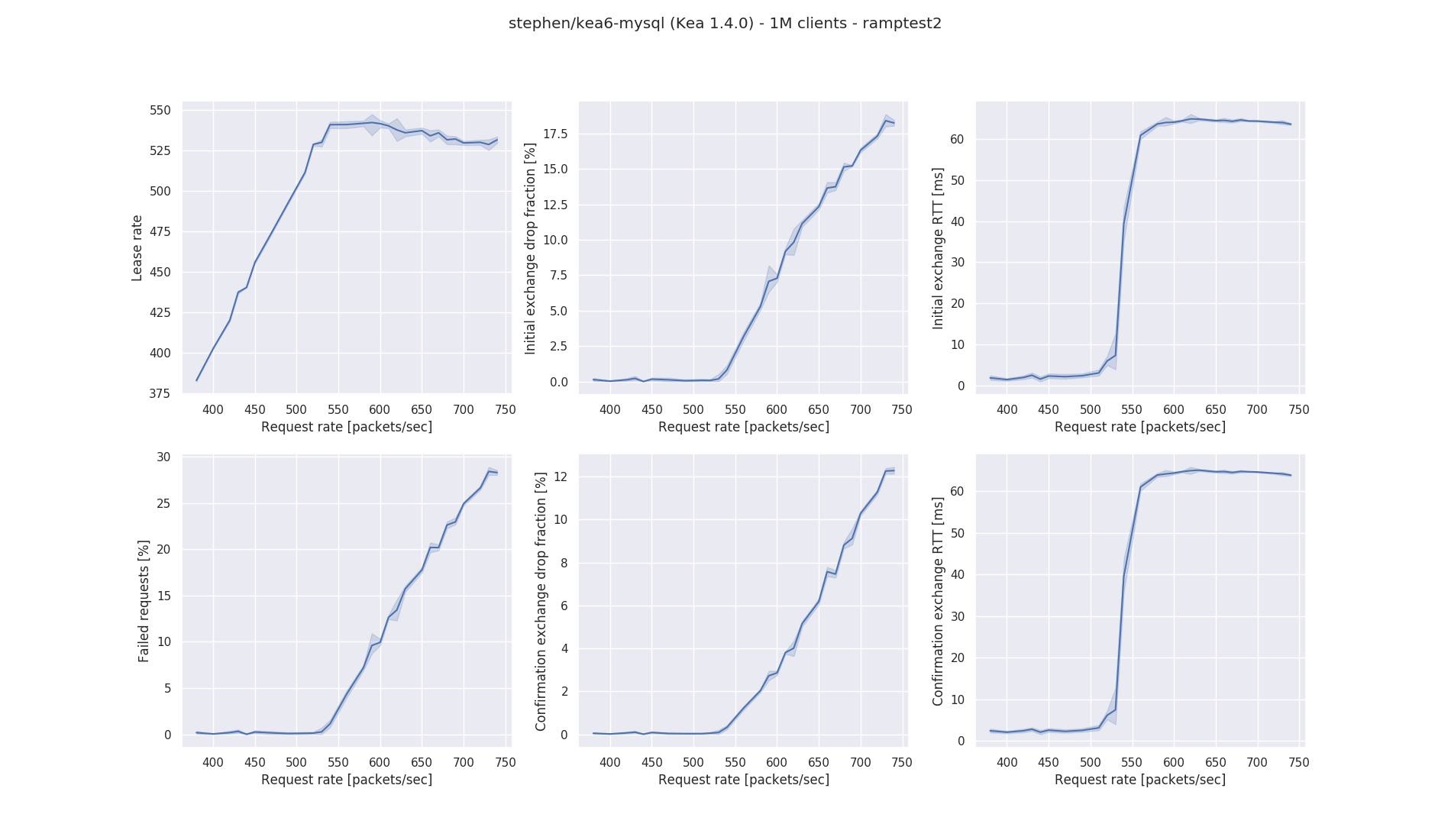

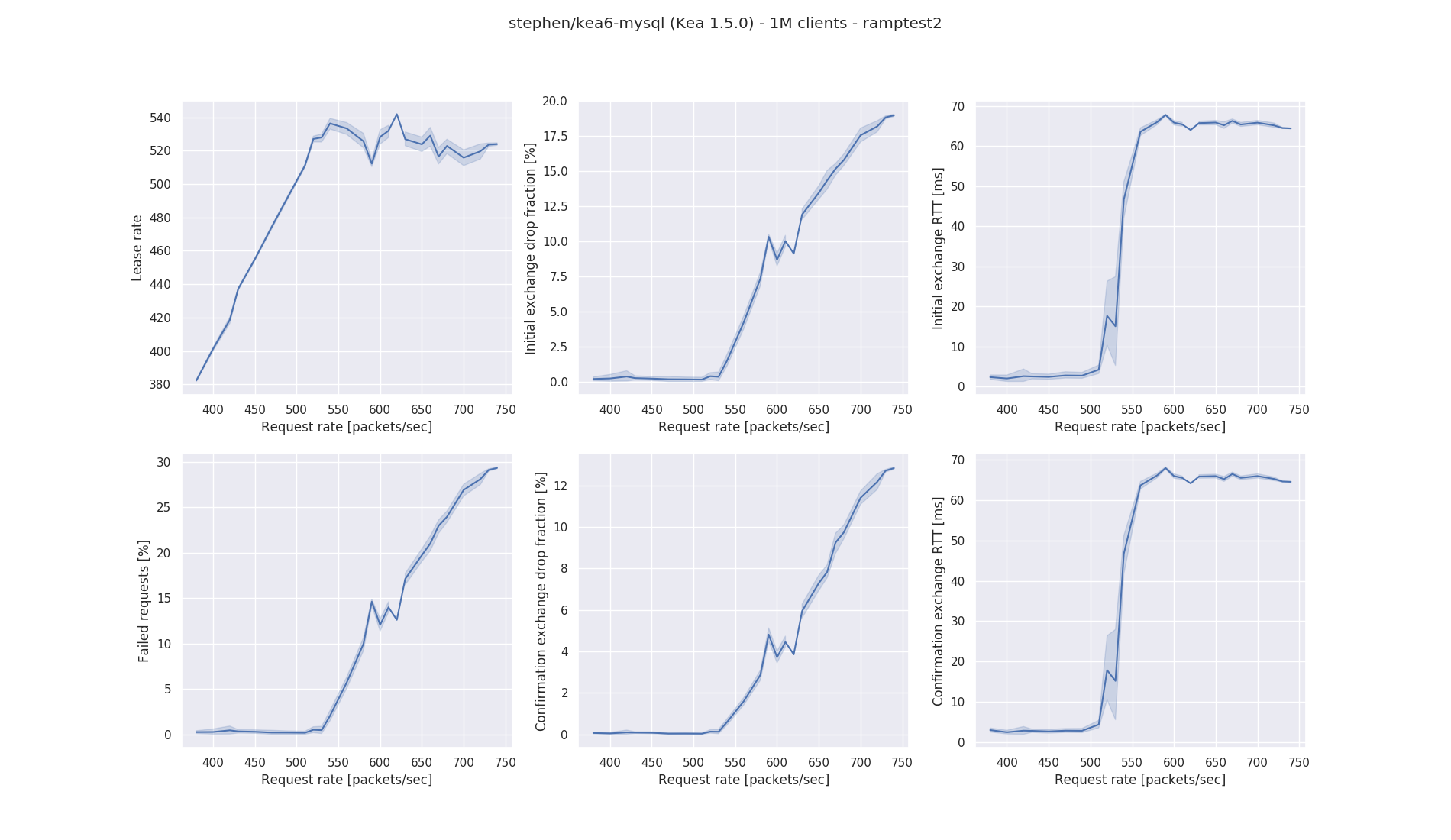

MySQL - DHCPv6

This section presents results for Kea/DHCPv6 running with a MySQL backend, version 5.7.21. As noted above, the database was initialized from scratch at the start of each run, and cleared between tests.

The graphs for Kea/DHCPv6 are similar to those for Kea/DHCPv4, although there are a number of differences.

- The peak lease rate is higher, reaching around 530-540 leases/sec (as compared to Kea/DHCPv4's peak value of about 500 to 510 leases/second).

- The behavior once the request rate increases beyond the peak is different: instead of the lease rate flattening out, the lease rate starts to decline under higher load.

- In the V4 case, the bulk of the requests that did not result in a lease were losses in the initial packet exchange. In the V6 case, about 60% of the failed requests were due to losses in the initial packet exchange and 40% were due to losses in the confirmation exchange.

- The reason for the glitch in the packet loss graphs for Kea 1.5.0 around a request rate of 600/second is not understood. It is unlikely to have been an environmental factor, as the irregularity covers several measurement points, and the measurements for Kea 1.5.0 were interspersed with measurements for other configurations, including those for Kea 1.4.0.

Using the definition of peak performance as that at which the percentage of failed requests is 2.5%, we can see that the performance for Kea 1.4.0 is about 540 leases/second and that for Kea 1.5.0 is about 537 leases/second.

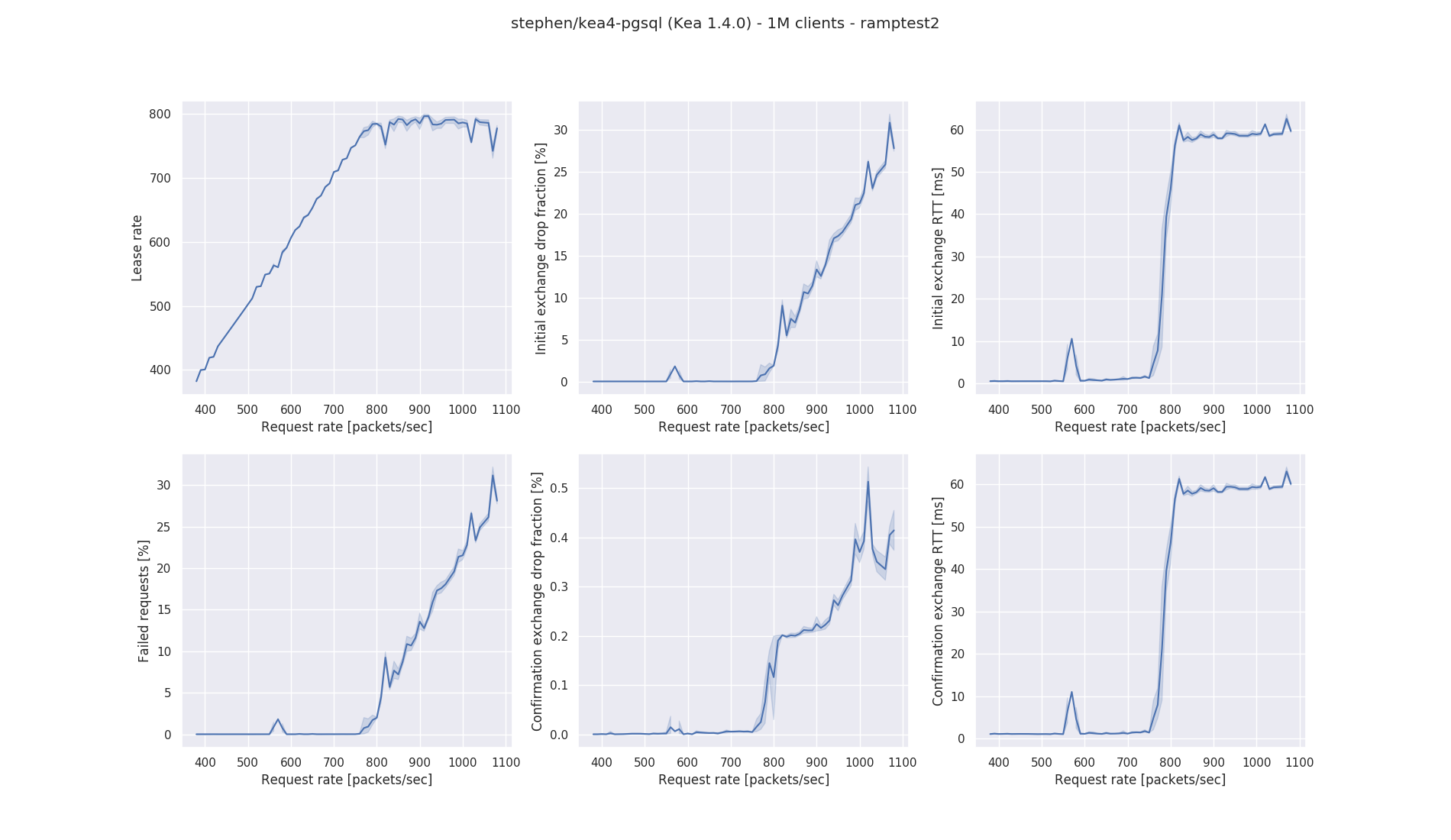

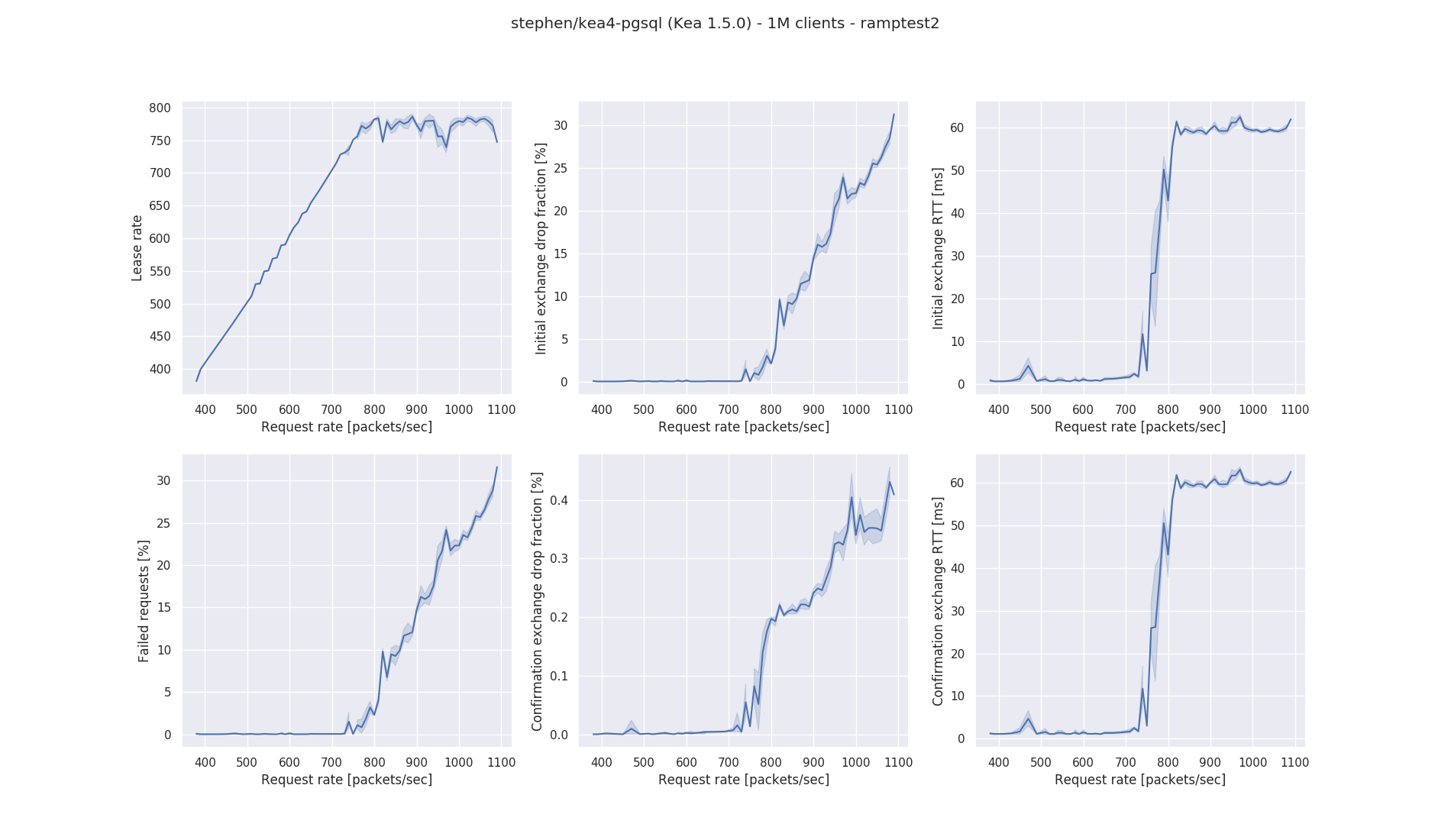

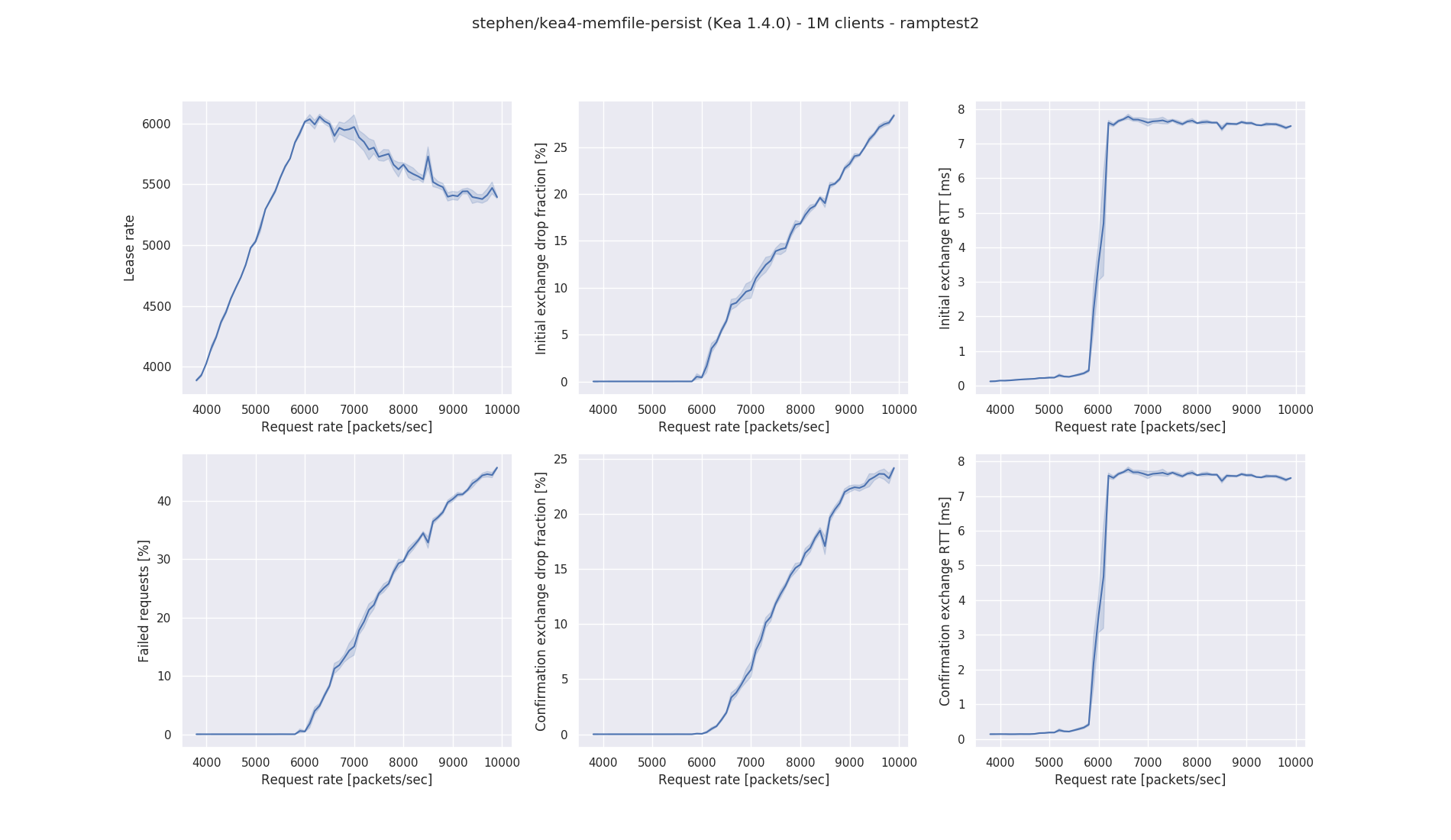

PostgreSQL - DHCPv4

This section presents results for Kea/DHCPv4 running with a PostgreSQL backend, version 9.6.6. As mentioned earlier, the database was initialized from scratch at the start of each run and cleared between tests.

The performance of Kea/DHCPv4 with a PostgreSQL backend has similarities with measurements for a MySQL backend, although the lease rate is higher:

- The performance rises more or less linearly with request rate then levels off as the number of dropped packets increases.

- Most of the packet loss occurs in the initial DISCOVER/OFFER exchange.

- There is still the curious "knee" on the packet loss curve for the confirmatory exchange.

Using the definition above of peak performance as that with a packet loss rate of 2.5%, the graphs indicate that the performance of Kea 1.4.0 is about 785 leases/second and that for Kea 1.5.0 about 780 leases/second.

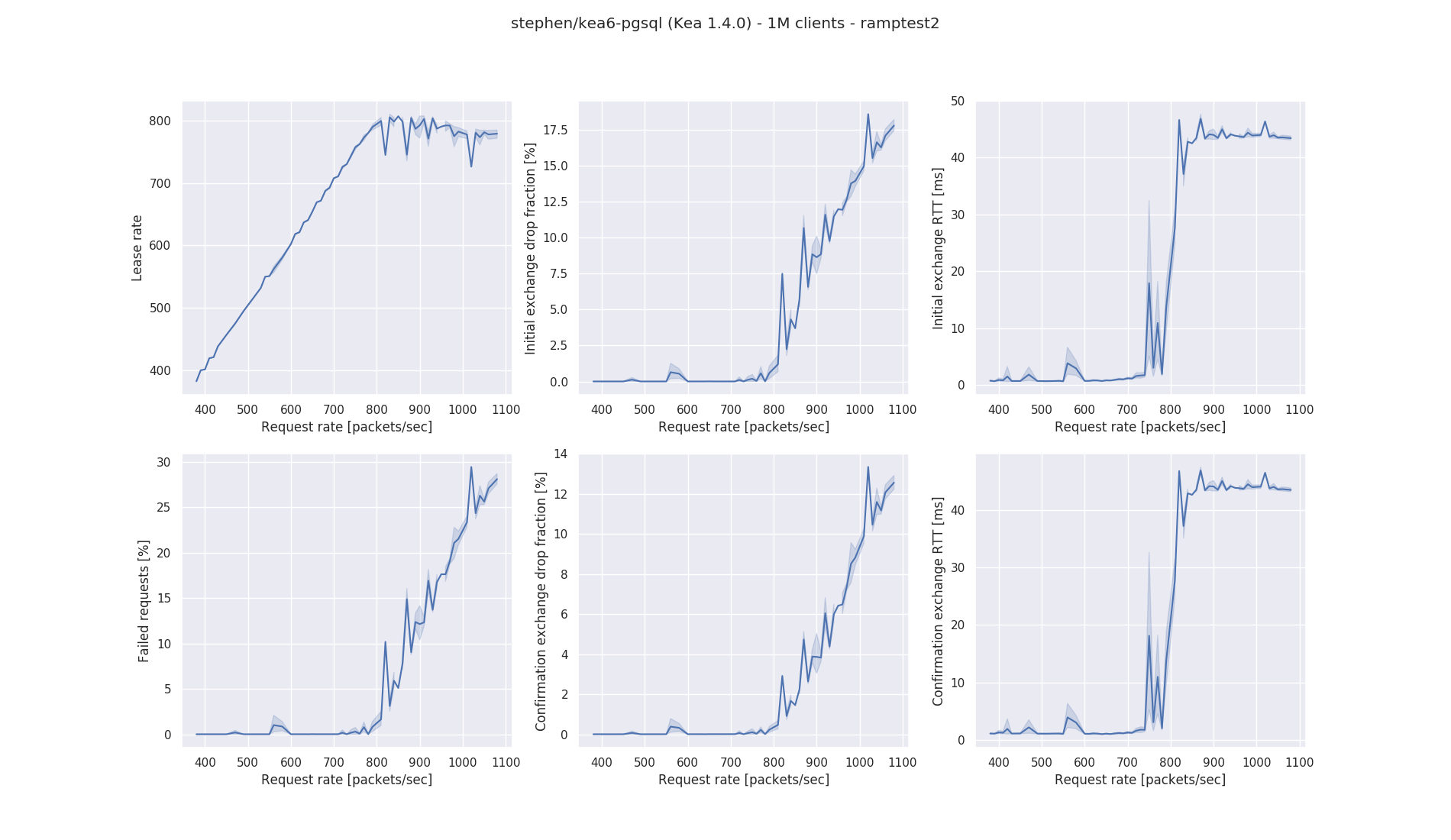

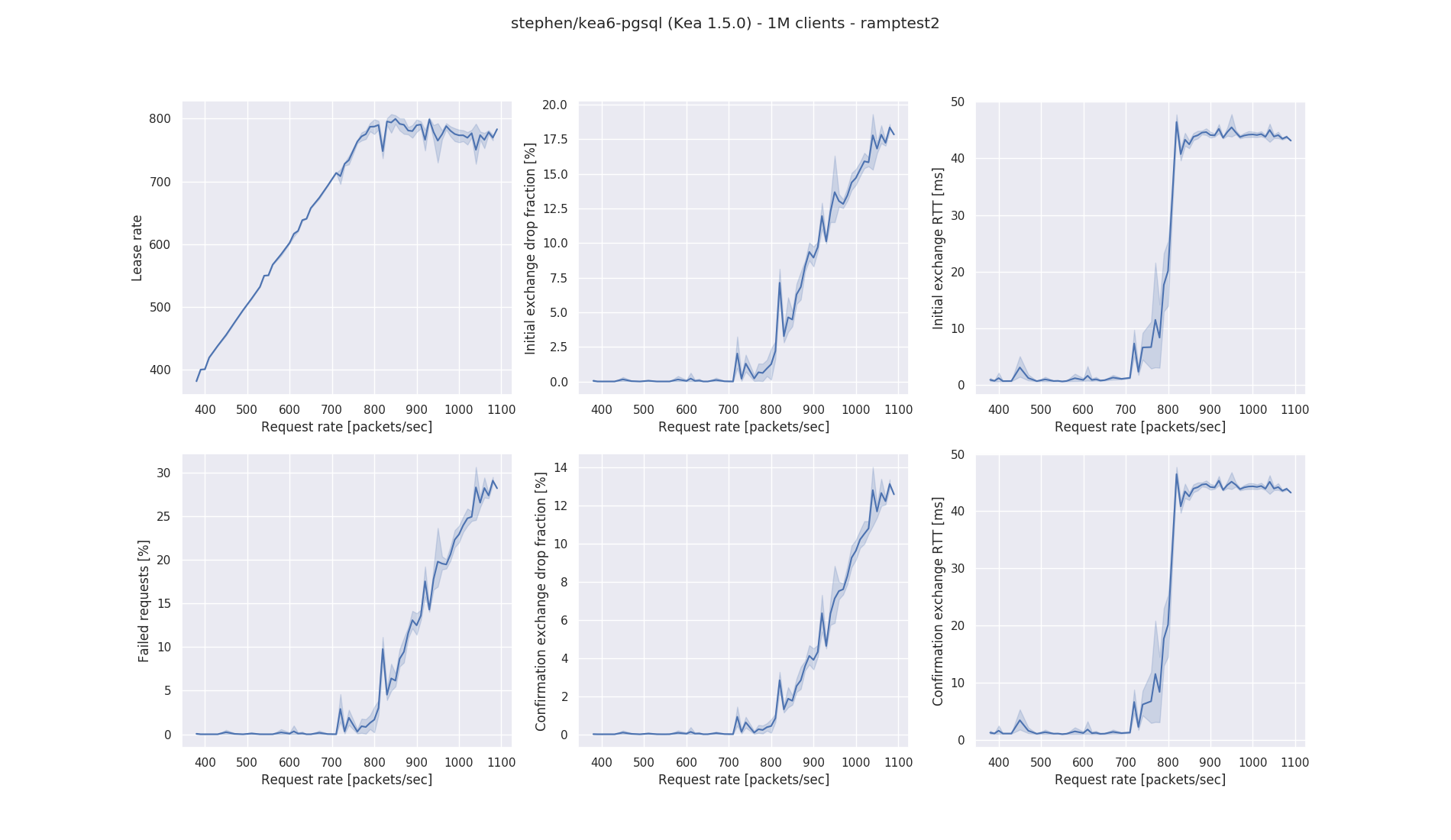

PostgreSQL - DHCPv6

This section presents results for Kea/DHCPv6 running with a PostgreSQL backend, version 9.6.6. As mentioned earlier, the database was initialized from scratch at the start of each run, and cleared between tests.

The characteristics of Kea/DHCPv6 running with a PostgreSQL backend are similar to those of Kea/DHCPv6 running with a MySQL backend:

- The performance of Kea/DHCPv6 with a PostgreSQL backend is better than that of Kea/DHCPv4 with the same backend.

- There is evidence of a drop in performance as the request rate goes above saturation (unlike Kea/DHCPv4 where the performance curve is relatively flat).

- About 60% of requests that don't result in a lease are due to packet loss in the initial exchange; the remaining 40% are due to packet loss in the confirmation exchange. (This is to be contrasted with the Kea/DHCPv4 case, where the bulk of the lost requests occur during the initial exchange).

With the previous definition of peak performance, the graphs indicate that the performance of Kea 1.4.0 is about 800 leases/second and that for Kea 1.5.0 marginally lower at about 790 leases/second.

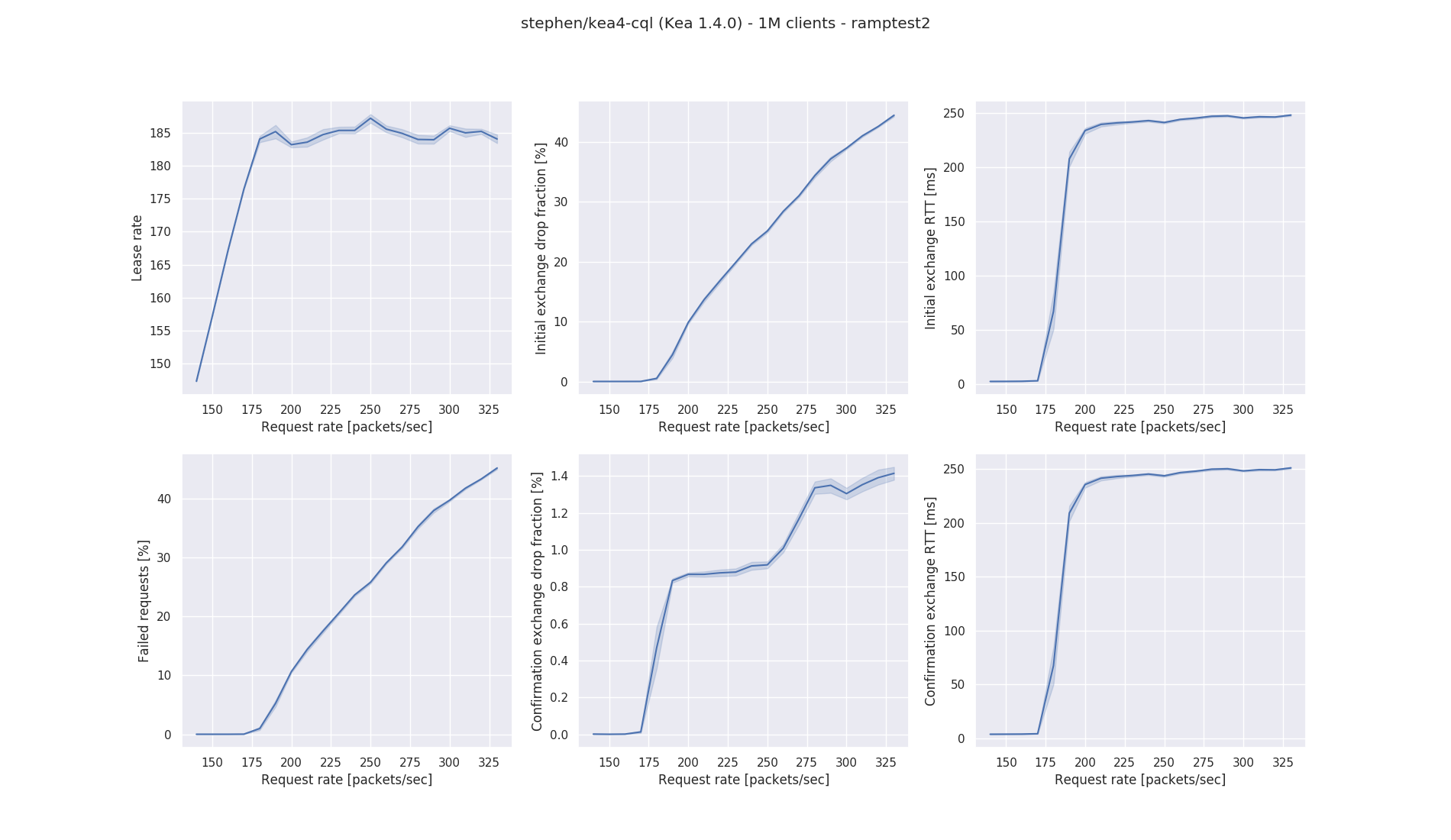

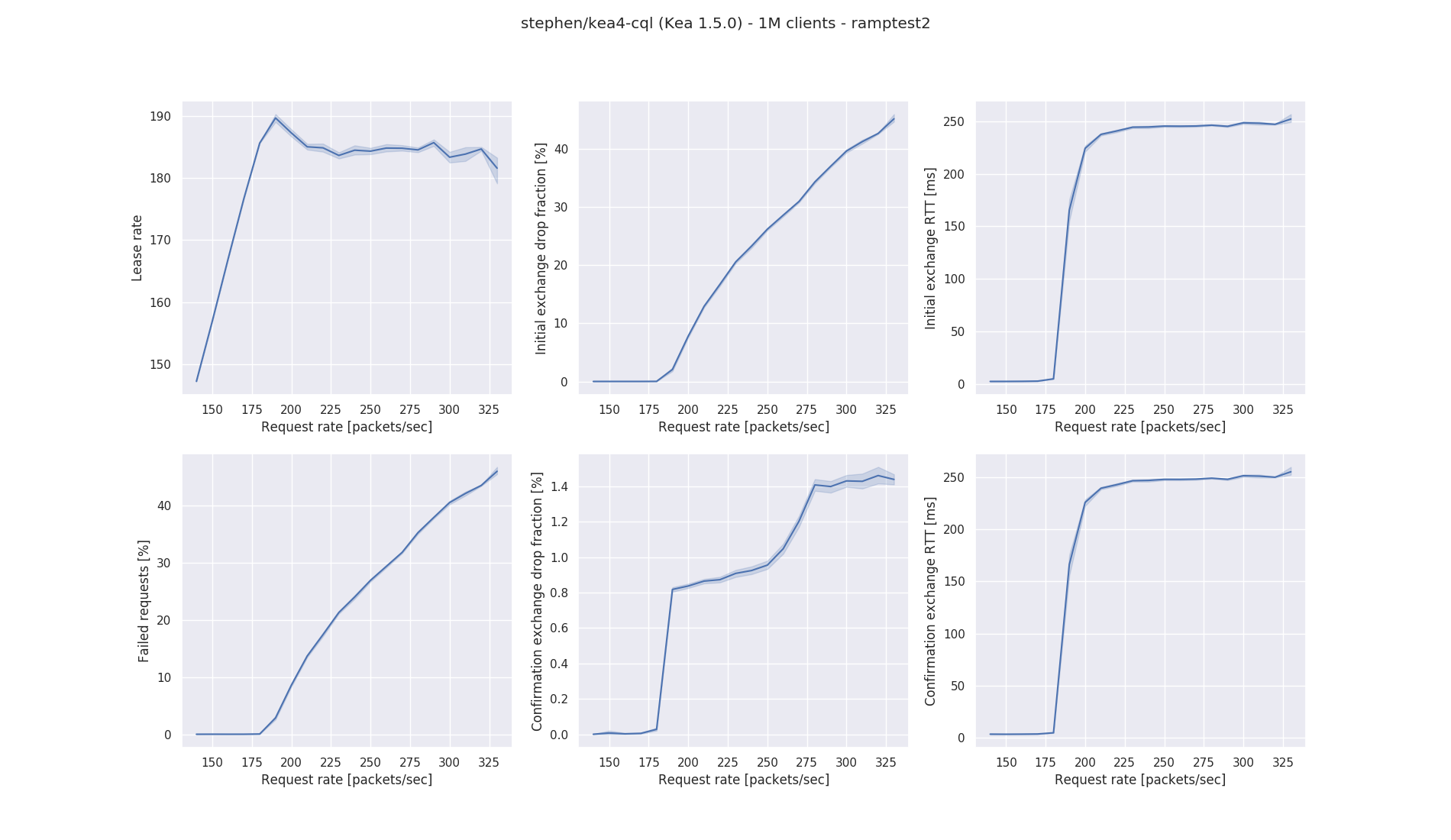

Cassandra - DHCPv4

This section presents results for Kea running with a Cassandra backend, version 3.11.3. (This was running with the cpp-driver V2.9 and Java 8.) As with the other databases, the database was initialized from scratch at the start of each run, and cleared between tests.

The results of Kea/DHCPv4 with a Cassandra backend are very similar to the results with the other database backends:

- The lease rate rises to a maximum then is more or less constant. The graph for Kea 1.5.0 is odd in that the lease rate rises to a definite maximum before falling and then flattening off.

- The bulk of the packet loss occurs during the initial exchange.

- There is a "knee" on the packet loss graph for the confirmation exchange.

The definition of peak performance suggests a lease rate for Kea 1.4.0 of 185 leases/second and that for Kea 1.5.0 of about 190 leases/second.

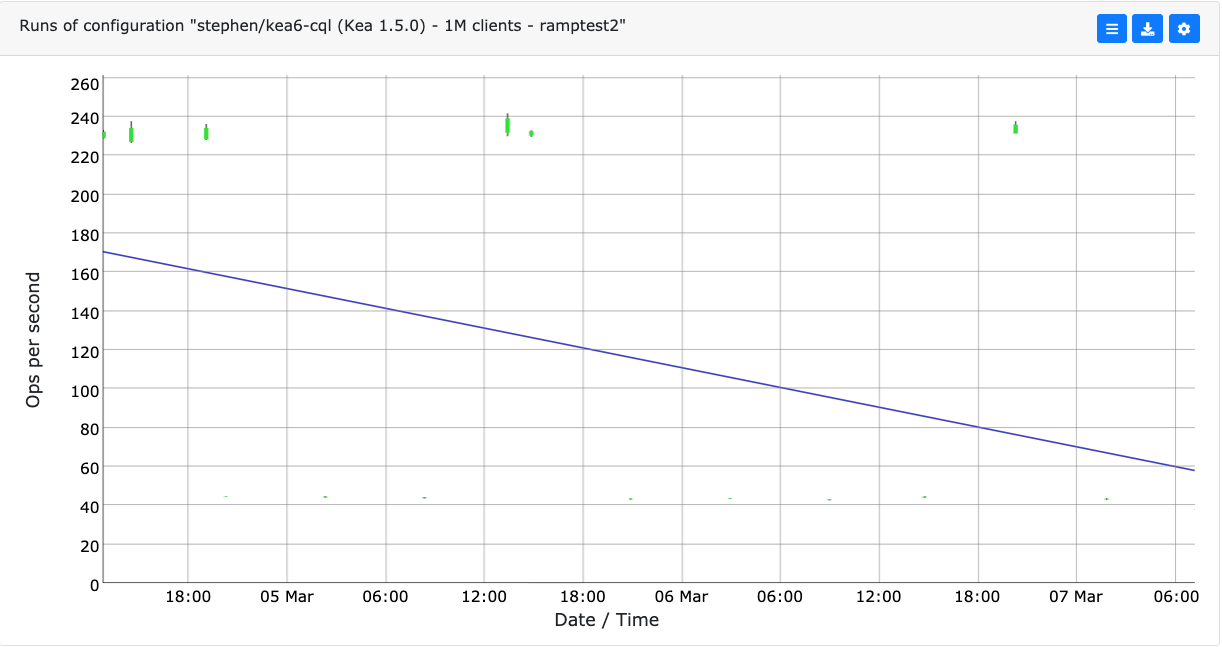

Cassandra - DHCPv6

No results are presented for Kea6 running with Cassandra because of very strange behavior exhibited during the tests. The above graph, showing a number of runs of perfdhcp with Kea6 and a Cassandra backend, illustrates this. In the runs plotted, the same version of the software was being run and the lease request rate was being incremented in small steps; the graph shows results from the region where we would expect Kea to be saturated and the graphed lease rate stable or declining. Before every run the database was started and initialized; between tests within the run, the database was cleared; and after the run was complete, the database was stopped. The results however are distinctly bimodal: the reported performance for this lease rate is either around 230 leases/second or just over 40 leases/second, with very little variance in the latter result. There appears to be no pattern in the way the system locks into one mode or the other. (The graph was taken from perflab: the blue line is the attempt by perflab to fit a straight line to all the data points, most of which are not shown here.)

What makes this behavior puzzling is that if it is a Cassandra problem, why is it not seen when Kea/DHCPv4 runs with Cassandra? If it is a Kea problem, why is it not seen with Kea/DHCPv6 running with other database backends? At any rate, until this is resolved, it is felt that it is not possible to give reliable performance figures for this combination.

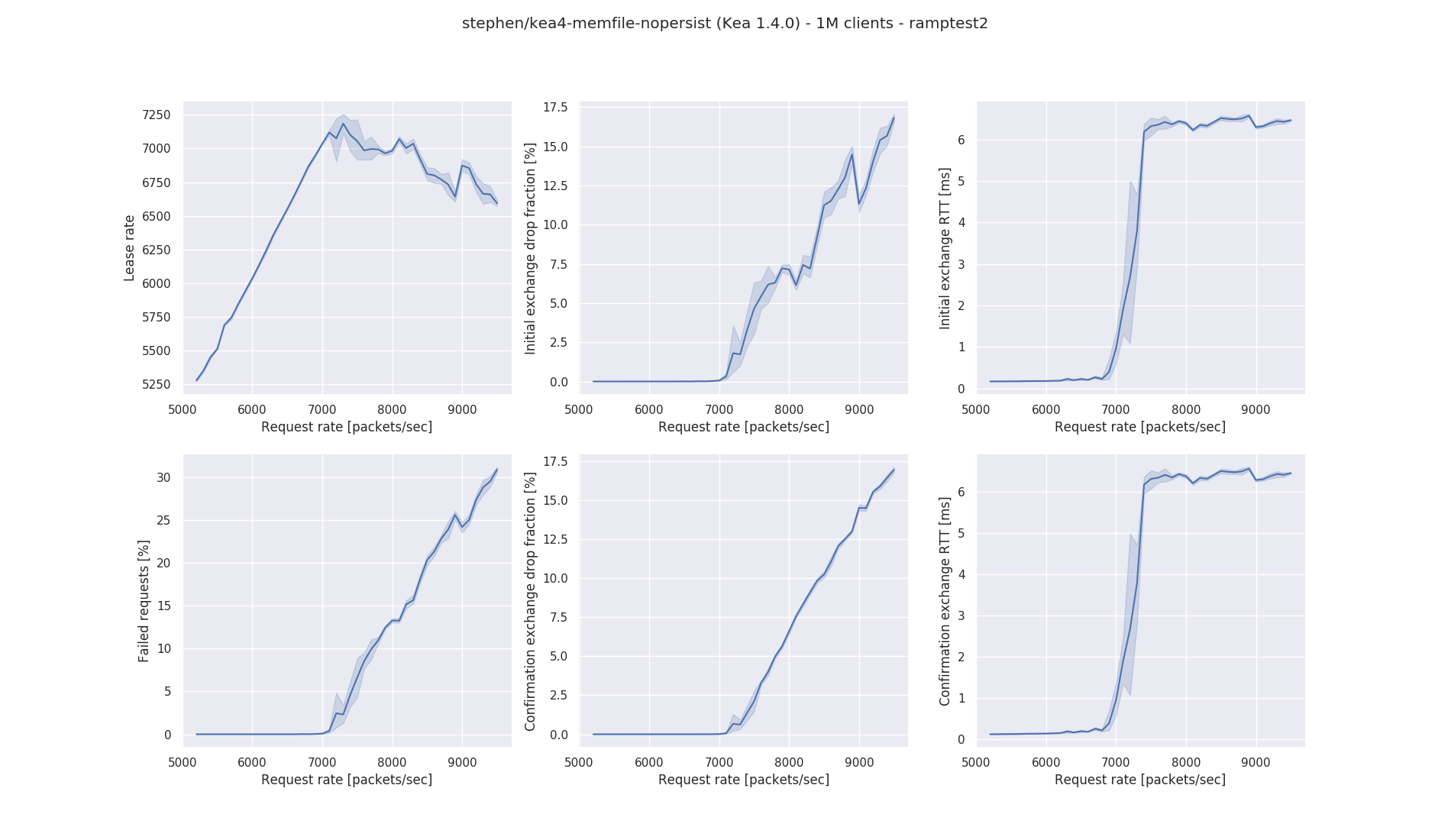

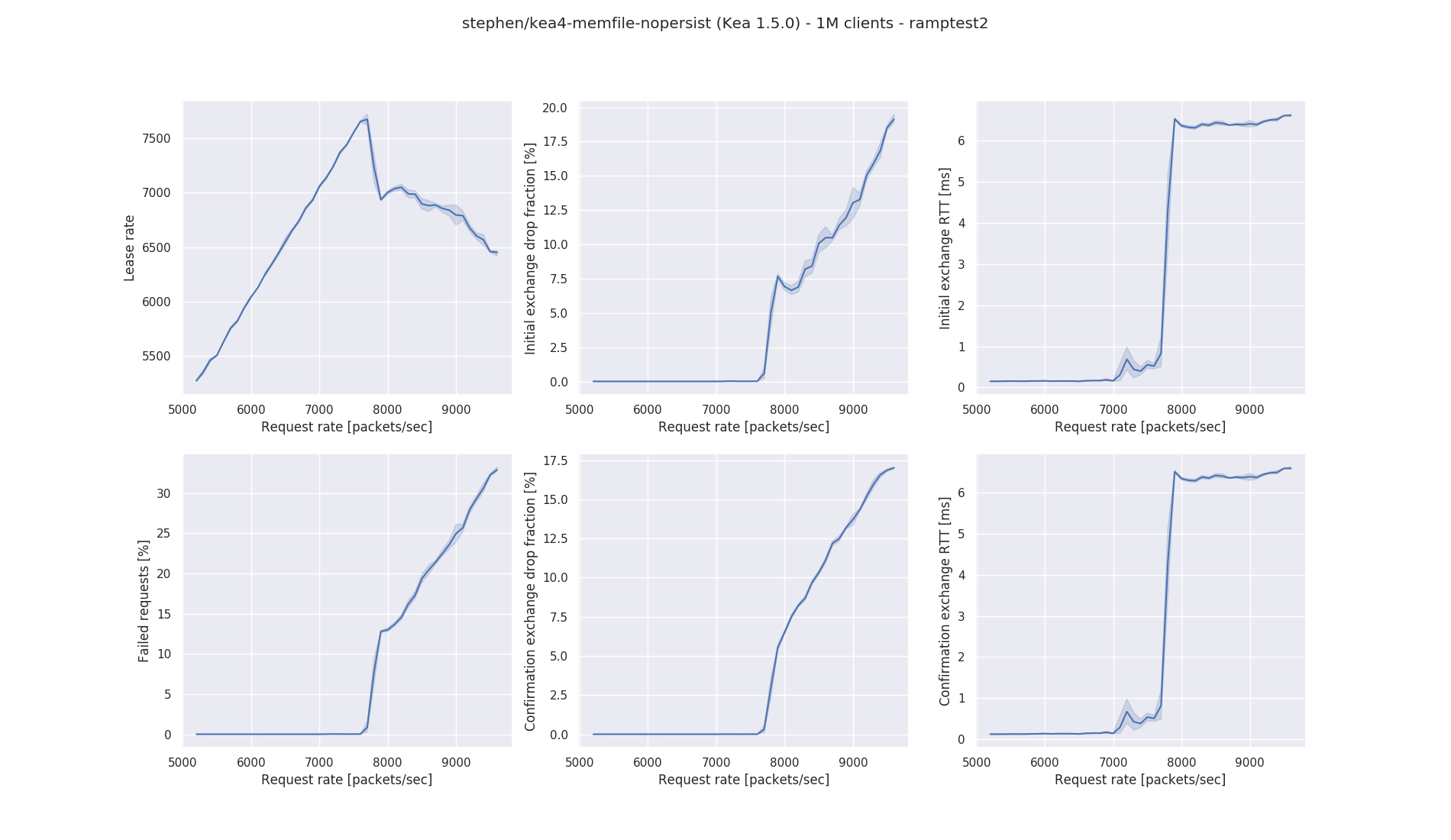

Memfile (no persistence) - DHCPv4

This section presents results for Kea running with a memfile backend, but not persisting the leases to disk. In this configuration, the data will be lost as soon as Kea is restarted or reconfigured. This scenario is run mostly for internal purposes, as a benchmark for internal packet processing and allocation engine efficiency. It does not reflect most realistic deployments. The in-memory database is, of course, initialized when Kea is started. It is also cleared (with the "lease4-wipe" command) between each test.

The lease rate matches the packet rate up to a maximum, after which the lease rate falls. (The very sharp drop for Kea 1.5.0 might have been caused by a single anomalous point, as the request rate specified to perfdhcp was being incremented in steps of 200 for this configuration.) The failed requests appear to be split more or less evenly between packets lost during the initial exchange and packets lost during the confirmatory exchange.

Using the previous definition of maximum lease rate, the performance of Kea 1.4.0 in this configuration is about 7,100 leases/second and that for Kea 1.5.0 is 7,700 leases/second.

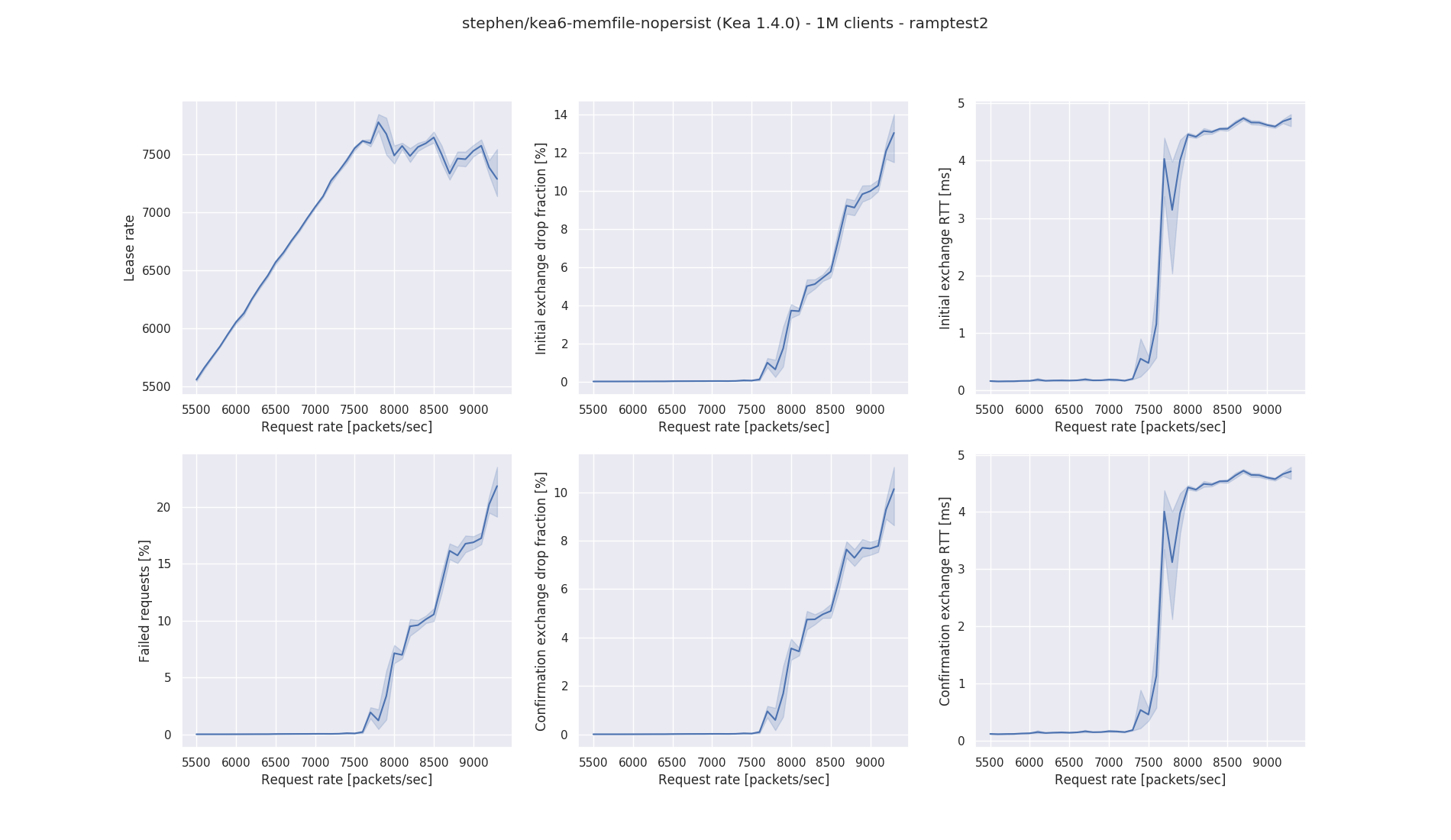

Memfile (no persistence) - DHCPv6

This section presents results for Kea running with a memfile backend, but not persisting the leases to disk. In this configuration, the data will be lost as soon as Kea is restarted or reconfigured. This scenario is run mostly for internal purposes, as a benchmark for internal packet processing and allocation engine efficiency. It does not reflect most realistic deployments. The in-memory database is, of course, initialized when Kea is started. It is also cleared (with the "lease6-wipe" command) between each test.

The characteristics of Kea/DHCPv6 performance with a non-persistent memfile backend are similar to those for Kea/DHCPv4. The lease rate rises linearly with request rate to some maximum, after which it declines, very sharply in the case of Kea 1.5.0 (caused by the very sharp rise in dropped packets). As with the Kea/DHCPv4 case, the packet losses are more or less split equally between losses during the initial packet exchange and losses during the confirmatory exchange. Another similarity is that the performance of Kea 1.5.0 is better than that of Kea 1.4.0.

Using our definition of peak performance, the graphs indicate a performance of about 7,700/second for Kea 1.4.0 and about 8,150/second for Kea 1.5.0.

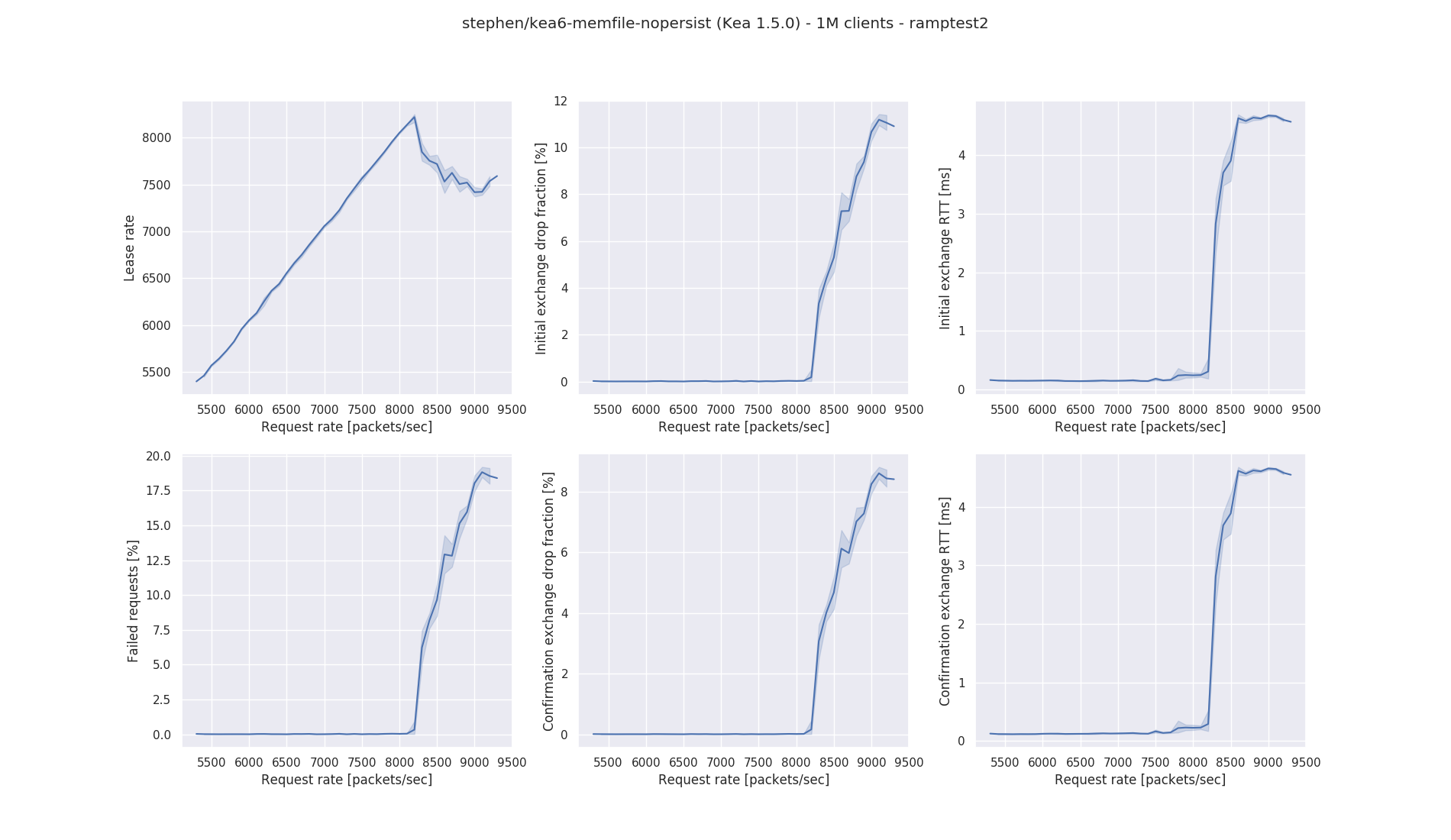

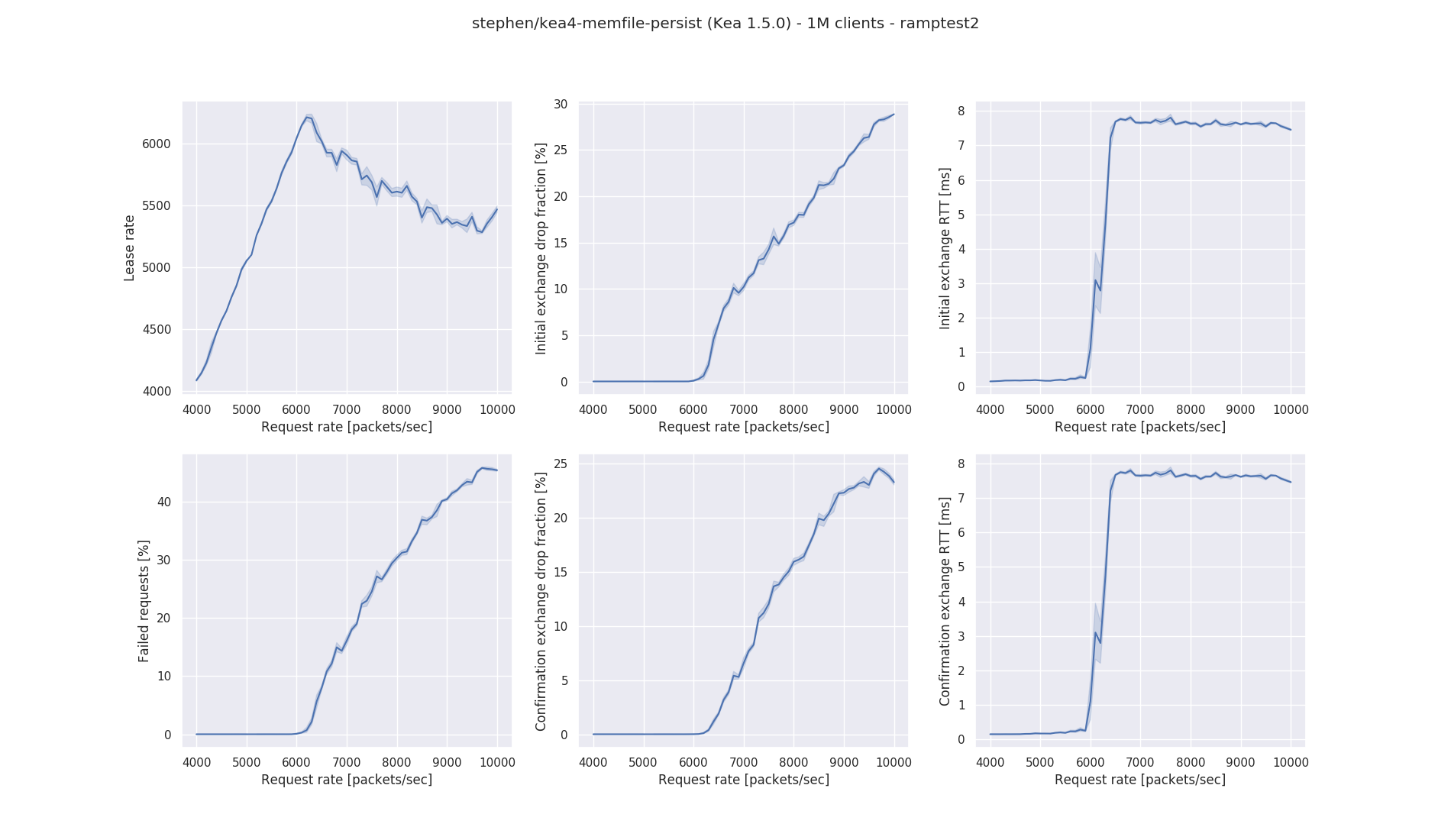

Memfile (persistence) - DHCPv4

This section presents results for Kea/DHCPv4 running with a memfile backend, and persisting the leases to disk. Kea starts with an empty database (no file) and writes the leases to disk as they are granted. The in-memory database is cleared (with the "lease4-wipe" command) between each test.

The characteristics of the configuration are similar to other memfile configurations, in that the lease rate reaches a peak and then declines. The effect of the additional activity of writing lease information (in text format) to disk can be seen in that the rates are lower than for the corresponding configuration without persistence. Also like the non-persistent configuration, dropped packets are evenly split between the initial and confirmatory packet exchange.

With the definition of peak lease rate as that at which the packet loss rate is 2.5%, the graphs indicate a figure of 6,000 leases/second for Kea 1.4.0 and about 6,200/second for Kea 1.5.0.

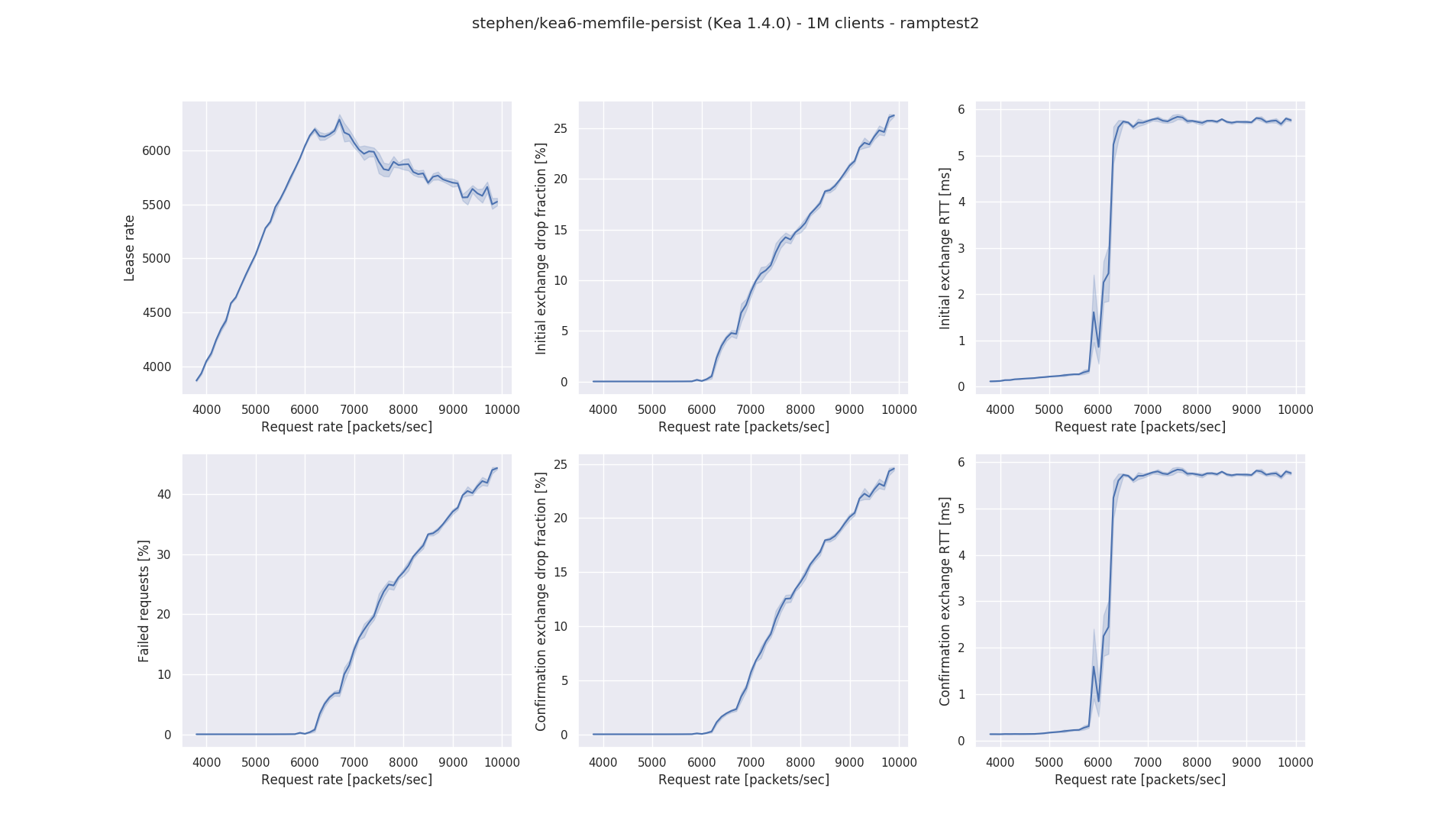

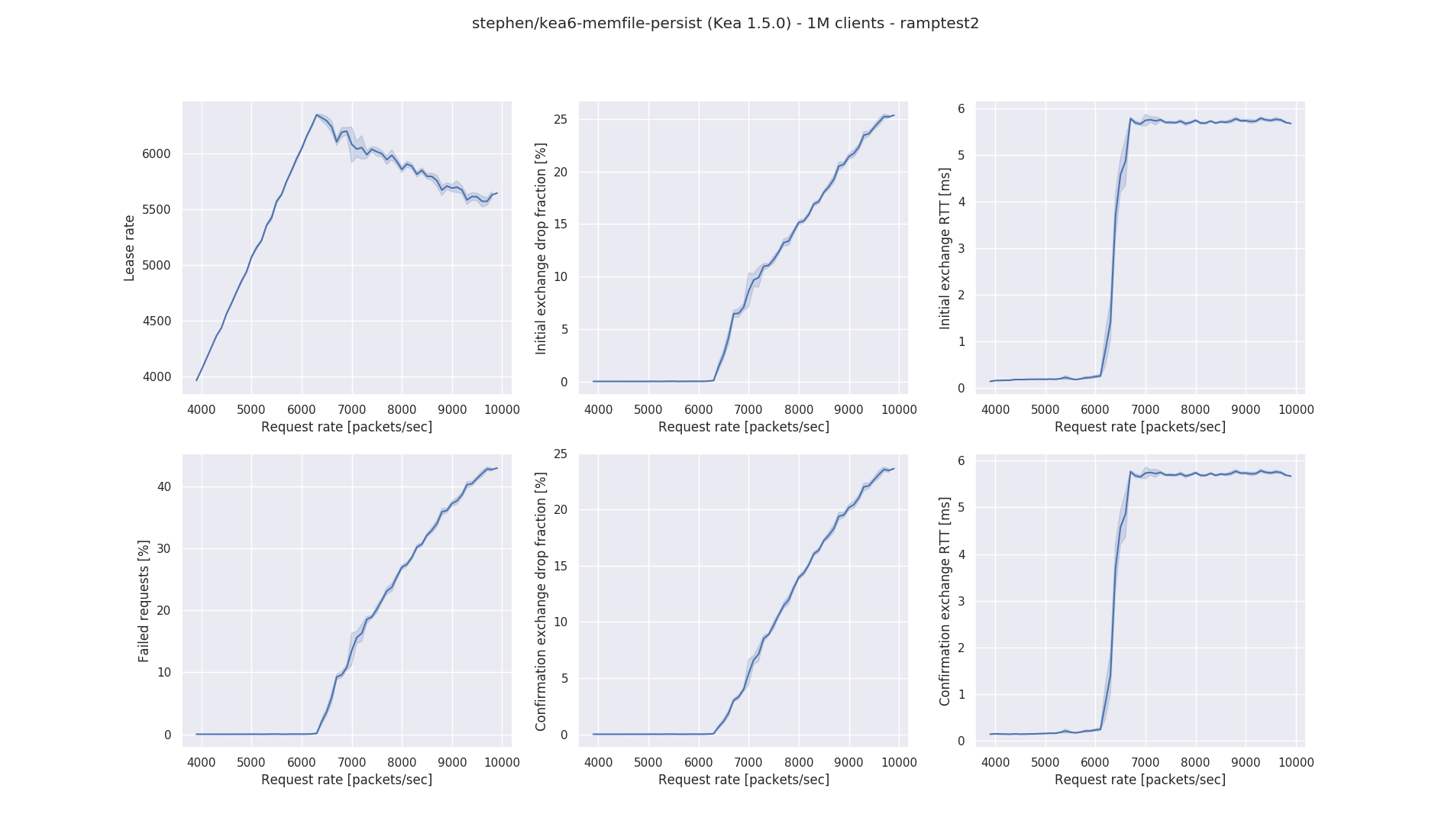

Memfile (persistence) - DHCPv6

This section presents results for Kea/DHCPv6 running with a memfile backend, and persisting the leases to disk. Kea starts with an empty database (no file) and writes the leases to disk as they are granted. The in-memory database is cleared (with the "lease6-wipe" command) between each test.

The curves have the same characteristics as the Kea/DHCPv4 persistent memfile configuration. Using the same definition of peak lease rate, we get figures of about 6,150 for Kea 1.4.0 and about 6,300 for Kea 1.5.0.

Summary

In the preceding sections, peak performance figures have been quoted. The definition used for the peak lease rate is that at which the packet loss rate is 2.5%, i.e. for a set of DISCOVER (SOLICIT) requests sent out by perfdhcp, 97.5% of them result in a lease being granted.

A summary of the results quoted is given here. When using them, be aware that these figures were taken, by eye, from the (sometimes noisy) graphs above. As such, they are subject to a margin of error typical of such an approach. They should, however, be accurate to about +/- 10%.

Kea/DHCPv4 - Lease Rate (leases/sec)

| Configuration | Kea 1.4.0 | Kea 1.5.0 |
|---|---|---|
| MySQL | 510 | 504 |
| PostgreSQL | 785 | 780 |
| Cassandra | 185 | 190 |
| Memfile (nopersist) | 7,100 | 7,700 |
| Memfile (persist) | 6,000 | 6,200 |

Kea/DHCPv6 - Lease Rate (leases/sec)

| Configuration | Kea 1.4.0 | Kea 1.5.0 |
|---|---|---|
| MySQL | 540 | 537 |
| PostgreSQL | 800 | 790 |
| Cassandra | no measurement | |
| Memfile (nopersist) | 7,700 | 8,150 |
| Memfile (persist) | 6,150 | 6,300 |
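
To put the two tables side by side, the sketch below computes the percentage change from Kea 1.4.0 to Kea 1.5.0 for each configuration. The lease rates are copied from the tables above; the dictionary layout and function name are illustrative. The results can be compared against the stated accuracy of about +/- 10% when judging how much weight a given difference can bear.

```python
# Lease rates (leases/second) copied from the summary tables:
# configuration -> (Kea 1.4.0, Kea 1.5.0).
RESULTS = {
    "DHCPv4": {
        "MySQL": (510, 504),
        "PostgreSQL": (785, 780),
        "Cassandra": (185, 190),
        "Memfile (nopersist)": (7100, 7700),
        "Memfile (persist)": (6000, 6200),
    },
    "DHCPv6": {
        "MySQL": (540, 537),
        "PostgreSQL": (800, 790),
        # Cassandra: no measurement
        "Memfile (nopersist)": (7700, 8150),
        "Memfile (persist)": (6150, 6300),
    },
}


def percent_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return 100.0 * (new - old) / old


for protocol, rows in RESULTS.items():
    for config, (v140, v150) in rows.items():
        change = percent_change(v140, v150)
        print(f"{protocol:7} {config:20} {change:+6.1f}%")
```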